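DeepStream Tutorial

Implementing the nvinfer Interface for Custom Models

How nvinfer Calls the Custom Interface

Any custom interface must ultimately be compiled into a standalone shared library. At runtime, nvinfer loads the library with dlopen() and looks up the functions it needs with dlsym(). Further details are documented in nvdsinfer_custom_impl.h: https://docs.nvidia.com/metropolis/deepstream/sdk-api/nvdsinfer__custom__impl_8h.html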
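The dlopen()/dlsym() sequence described above can be sketched with the POSIX calls directly. load_symbol() is a hypothetical helper, not part of DeepStream. Conceptually, nvinfer would pass custom-lib-path as the library and parse-bbox-func-name as the symbol name. To let the sketch run without a custom .so, the usage below resolves strlen from the C library that is already loaded.

```cpp
// Minimal sketch of the dlopen()/dlsym() pattern (Linux/glibc assumed;
// glibc older than 2.34 also needs -ldl when linking).
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <dlfcn.h>

// Hypothetical helper: open `lib_path` and resolve `symbol` by its plain
// (unmangled) name. Passing nullptr as lib_path searches the running program
// and the libraries it has already loaded. On success the caller owns
// *handle_out and must dlclose() it.
template <typename Fn>
Fn load_symbol(const char* lib_path, const char* symbol, void** handle_out) {
    *handle_out = nullptr;
    void* handle = dlopen(lib_path, RTLD_NOW);
    if (handle == nullptr) {
        std::fprintf(stderr, "dlopen failed: %s\n", dlerror());
        return nullptr;
    }
    dlerror();                          // clear any stale error first
    void* sym = dlsym(handle, symbol);
    if (const char* err = dlerror()) {  // non-null means the lookup failed
        std::fprintf(stderr, "dlsym failed: %s\n", err);
        dlclose(handle);
        return nullptr;
    }
    *handle_out = handle;
    return reinterpret_cast<Fn>(sym);
}
```

For example, `load_symbol<std::size_t (*)(const char*)>(nullptr, "strlen", &h)` returns a callable strlen. The lookup is by plain name, which is why custom parser functions are declared extern "C".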

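Custom Output Parsing

For detectors, you must parse the model output yourself and convert it into bounding-box coordinates and object classes. For classifiers, you must parse out the object attributes yourself. Examples are in /opt/nvidia/deepstream/deepstream/sources/libs/nvdsinfer_customparser, and the README there explains how to use a custom parser.

A custom parsing function must be of type NvDsInferParseCustomFunc. The type definition below, at line 221 of nvdsinfer_custom_impl.h, is the signature every custom parsing function must match: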

```cpp
/**
 * Type definition for the custom bounding box parsing function.
 *
 * @param[in] outputLayersInfo A vector containing information on the output
 * layers of the model.
 * @param[in] networkInfo Network information.
 * @param[in] detectionParams Detection parameters required for parsing
 * objects.
 * @param[out] objectList A reference to a vector in which the function
 * is to add parsed objects.
 */
typedef bool (* NvDsInferParseCustomFunc) (
    std::vector<NvDsInferLayerInfo> const &outputLayersInfo,
    NvDsInferNetworkInfo const &networkInfo,
    NvDsInferParseDetectionParams const &detectionParams,
    std::vector<NvDsInferObjectDetectionInfo> &objectList);
```

The custom parsing function is specified with the parse-bbox-func-name and custom-lib-path properties in the Gst-nvinfer configuration file. For example, suppose we define a custom bounding-box parser for YOLOv2-tiny named NvDsInferParseCustomYoloV2Tiny and build it into the shared library nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so. The configuration file then needs the following settings:

```
parse-bbox-func-name=NvDsInferParseCustomYoloV2Tiny
custom-lib-path=nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so
```

You can verify a function's prototype by invoking the CHECK_CUSTOM_PARSE_FUNC_PROTOTYPE() macro after the function definition.

Example usage:

```cpp
extern "C" bool NvDsInferParseCustomYoloV2Tiny(
```

https://forums.developer.nvidia.com/t/deepstreamsdk-4-0-1-custom-yolov3-tiny-error/108391?u=jenhao
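The sketch below shows a parser that satisfies NvDsInferParseCustomFunc, under stated assumptions. It compiles without DeepStream. The structs reuse DeepStream's type names but are simplified stand-ins that keep only a few fields. The real definitions live in nvdsinfer.h and nvdsinfer_custom_impl.h, so do not compile this next to the real headers. The output layout (rows of [cx, cy, w, h, confidence, class_id], normalized) and the name NvDsInferParseCustomSketch are hypothetical. The static_assert only mimics the idea behind CHECK_CUSTOM_PARSE_FUNC_PROTOTYPE; it is not that macro's definition.

```cpp
#include <cassert>
#include <type_traits>
#include <vector>

// Simplified stand-ins (subset of fields only).
struct NvDsInferDims { unsigned int numElements; };
struct NvDsInferLayerInfo {
    const char* layerName;
    void* buffer;
    NvDsInferDims inferDims;
};
struct NvDsInferNetworkInfo { unsigned int width, height, channels; };
struct NvDsInferParseDetectionParams {
    unsigned int numClassesConfigured;
    std::vector<float> perClassPreclusterThreshold;
};
struct NvDsInferObjectDetectionInfo {
    unsigned int classId;
    float left, top, width, height;
    float detectionConfidence;
};

typedef bool (*NvDsInferParseCustomFunc)(
    std::vector<NvDsInferLayerInfo> const& outputLayersInfo,
    NvDsInferNetworkInfo const& networkInfo,
    NvDsInferParseDetectionParams const& detectionParams,
    std::vector<NvDsInferObjectDetectionInfo>& objectList);

// Hypothetical layout: one float layer of rows [cx, cy, w, h, conf, class_id],
// with box values normalized to [0, 1].
extern "C" bool NvDsInferParseCustomSketch(
    std::vector<NvDsInferLayerInfo> const& outputLayersInfo,
    NvDsInferNetworkInfo const& networkInfo,
    NvDsInferParseDetectionParams const& detectionParams,
    std::vector<NvDsInferObjectDetectionInfo>& objectList)
{
    if (outputLayersInfo.empty() || outputLayersInfo[0].buffer == nullptr)
        return false;                       // report a parsing failure
    const NvDsInferLayerInfo& layer = outputLayersInfo[0];
    const float* data = static_cast<const float*>(layer.buffer);
    const unsigned int kRow = 6;
    for (unsigned int i = 0; i + kRow <= layer.inferDims.numElements; i += kRow) {
        const float conf = data[i + 4];
        if (data[i + 5] < 0) continue;      // invalid class id
        const unsigned int cls = static_cast<unsigned int>(data[i + 5]);
        if (cls >= detectionParams.numClassesConfigured ||
            cls >= detectionParams.perClassPreclusterThreshold.size())
            continue;                       // class not configured
        if (conf < detectionParams.perClassPreclusterThreshold[cls])
            continue;                       // below the per-class threshold
        NvDsInferObjectDetectionInfo obj{};
        obj.classId = cls;
        obj.detectionConfidence = conf;
        // Normalized center/size -> top-left box in network-input pixels.
        obj.width = data[i + 2] * networkInfo.width;
        obj.height = data[i + 3] * networkInfo.height;
        obj.left = data[i + 0] * networkInfo.width - obj.width / 2;
        obj.top = data[i + 1] * networkInfo.height - obj.height / 2;
        objectList.push_back(obj);
    }
    return true;
}

// Fails to compile if the signature drifts from the typedef.
static_assert(std::is_same<decltype(&NvDsInferParseCustomSketch),
                           NvDsInferParseCustomFunc>::value,
              "parser signature does not match NvDsInferParseCustomFunc");
```

The per-class threshold lookup mirrors how detectionParams is meant to be used. A real YOLO parser would also decode anchors and run clustering, which this sketch leaves out.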

IPlugin Implementation

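For network layers that TensorRT does not support, DeepStream relies on TensorRT's IPlugin interface for custom implementations. The objectDetector_SSD, objectDetector_FasterRCNN, and objectDetector_YoloV3 folders under /opt/nvidia/deepstream/deepstream/sources show how to use custom layers.

The objectDetector_YoloV3 sample shows how to implement the YOLOv3 yolo layer, which TensorRT does not support. yolo.cpp shows how the custom layer is invoked; an excerpt follows:

```cpp
....
```

For other YOLO versions, NVIDIA has already built many plugins as well, such as the region layer used by YOLOv2. The other prebuilt layers can be found here: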

https://github.com/NVIDIA/TensorRT/tree/1c0e3fdd039c92e584430a2ed91b4e2612e375b8/plugin

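Drawing the Pipeline Graph of a Sample

First, add the following line to ~/.bashrc to set where pipeline graphs are saved. Note that GStreamer does not create the folder for you, so make sure it exists.

```shell
export GST_DEBUG_DUMP_DOT_DIR=/tmp
```

Next, add the following line to the program before the pipeline state is set to PLAYING:

```c
GST_DEBUG_BIN_TO_DOT_FILE(pipeline, GST_DEBUG_GRAPH_SHOW_ALL, "dstest1-pipeline");
```

After the program runs, a .dot file is generated in the folder set above. You can view it with Graphviz or with a VS Code extension.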
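Because GStreamer will not create the dump folder, a program can check it before dumping. ensure_dot_dump_dir() below is a hypothetical helper, not a GStreamer API. It reads GST_DEBUG_DUMP_DOT_DIR and creates the last path component if needed (like mkdir, not mkdir -p). When the variable is unset, GStreamer does not write a graph, so the helper reports false in that case too.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdlib>
#include <sys/stat.h>
#include <sys/types.h>

// Returns true if the directory in GST_DEBUG_DUMP_DOT_DIR is usable
// (it already existed as a directory or was just created).
bool ensure_dot_dump_dir() {
    const char* dir = std::getenv("GST_DEBUG_DUMP_DOT_DIR");
    if (dir == nullptr || *dir == '\0') return false;   // dumping is disabled
    if (mkdir(dir, 0755) == 0) return true;             // freshly created
    if (errno != EEXIST) return false;                  // e.g. parent missing
    struct stat st;
    return stat(dir, &st) == 0 && S_ISDIR(st.st_mode);  // exists: must be a dir
}
```

Call it once before GST_DEBUG_BIN_TO_DOT_FILE(), and log a warning if it returns false.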

DeepStream Documentation

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_plugin_gst-nvdsxfer.html

Building a Pipeline with an RTSP Source Using gst-launch-1.0

First, use gst-launch-1.0 to build a simple pipeline with RTSP input and on-screen output:

```shell
gst-launch-1.0 rtspsrc location='rtsp://192.168.1.10:554/user=admin_password=xxxxxx_channel=1_stream=0.sdp' ! rtph264depay ! h264parse ! nvv4l2decoder ! nvvideoconvert ! video/x-raw,format=BGRx ! videoconvert ! video/x-raw,format=BGR ! autovideosink
```

Converting the Python Sample to C++

https://github.com/NVIDIA-AI-IOT/deepstream_python_apps/tree/master/apps/deepstream-rtsp-in-rtsp-out

Creating an MJPEG Stream

- Command-line approach: https://stackoverflow.com/questions/59885450/jpeg-live-stream-in-html-slow

```shell
gst-launch-1.0 -v rtspsrc location="rtsp://<rtsp url>/live1.sdp" \
  ! rtph264depay ! avdec_h264 \
  ! timeoverlay halignment=right valignment=bottom \
  ! videorate ! video/x-raw,framerate=37000/1001 ! jpegenc ! multifilesink location="snapshot.jpeg"
```

Looking Up Documentation for a DeepStream Bin

```shell
gst-inspect-1.0 nvurisrcbin
```

Writing gst-launch-1.0 Debug Output to a File

References:

https://www.cnblogs.com/xleng/p/12228720.html

```shell
GST_DEBUG_NO_COLOR=1 GST_DEBUG_FILE=pipeline.log GST_DEBUG=5 gst-launch-1.0 -v rtspsrc location="rtsp://192.168.8.19/live.sdp" user-id="root" user-pw="3edc\$RFV" ! rtph264depay ! avdec_h264 ! timeoverlay halignment=right valignment=bottom ! videorate ! video/x-raw,framerate=37000/1001 ! jpegenc ! multifilesink location="snapshot.jpeg"
```

References:

https://gstreamer.freedesktop.org/documentation/tutorials/basic/debugging-tools.html?gi-language=c

https://embeddedartistry.com/blog/2018/02/22/generating-gstreamer-pipeline-graphs/

Viewing a UDP Video Stream Locally

Set host ($HOST below) to the local machine's IP address or 127.0.0.1.

Send:

```shell
gst-launch-1.0 -v videotestsrc ! x264enc tune=zerolatency bitrate=500 speed-preset=superfast ! rtph264pay ! udpsink port=5000 host=$HOST
```

Receive:

```shell
gst-launch-1.0 -v udpsrc port=5000 ! "application/x-rtp, media=(string)video, clock-rate=(int)90000, encoding-name=(string)H264, payload=(int)96" ! rtph264depay ! h264parse ! avdec_h264 ! videoconvert ! autovideosink
```

GLib Documentation

http://irtfweb.ifa.hawaii.edu/SoftwareDocs/gtk20/glib/glib-hash-tables.html#g-int-hash

GDB Text User Interface (TUI)

https://blog.louie.lu/2016/09/12/gdb-%E9%8C%A6%E5%9B%8A%E5%A6%99%E8%A8%88/

Example

https://gist.github.com/liviaerxin/bb34725037fd04afa76ef9252c2ee875#tips-for-debug

RTSP Element nvrtspoutsinkbin

nvrtspoutsinkbin has no documentation; the only way to see its details is with gst-inspect-1.0.

https://forums.developer.nvidia.com/t/where-can-fine-nvrtspoutsinkbin-info/199124

Example

/opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream_reference_apps/deepstream-bodypose-3d/sources/deepstream_pose_estimation_app.cpp

```cpp
/* Create RTSP output bin */
```

Getting the Source ID

https://forums.developer.nvidia.com/t/how-to-get-sources-index-in-deepstream/244461

You can use a probe to read the metadata.

deepstream_test3_app.c contains an example.

Switching Input Sources

https://forums.developer.nvidia.com/t/how-switch-camera-output-gst-nvmultistreamtiler/233062

```python
tiler_sink_pad.add_probe(Gst.PadProbeType.BUFFER, tiler_sink_pad_buffer_probe, 0)
```

/opt/nvidia/deepstream/deepstream/sources/apps/apps-common/src/deepstream-yaml/deepstream_source_yaml.cpp contains an example.

Reconnecting After Disconnection

A Rust plugin (it may be possible to build it as a C library):

https://coaxion.net/blog/2020/07/automatic-retry-on-error-and-fallback-stream-handling-for-gstreamer-sources/

https://gitlab.freedesktop.org/gstreamer/gst-plugins-rs/-/tree/master/utils/fallbackswitch

Rust Documentation

https://rust-lang.tw/book-tw/ch01-03-hello-cargo.html

Saving Frames That Contain Detected Objects

https://forums.developer.nvidia.com/t/saving-frame-with-detected-object-jetson-nano-ds4-0-2/121797/3

Disabling the Ubuntu GUI

https://linuxconfig.org/how-to-disable-enable-gui-on-boot-in-ubuntu-20-04-focal-fossa-linux-desktop

Releasing GPU Resources

https://heary.cn/posts/Linux环境下重装NVIDIA驱动报错kernel-module-nvidia-modeset-in-use问题分析/

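NVIDIA persistence mode (controlled with nvidia-smi) keeps GPU resources in use; they must be released before a new driver can be installed.

You can turn persistence mode off with nvidia-smi: https://docs.nvidia.com/deploy/driver-persistence/index.html#usage

```shell
nvidia-smi -pm 0
```

Removing the Old Driver

```shell
apt-get remove --purge nvidia-driver-520
```

Purpose of queue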

probe

https://coaxion.net/blog/2014/01/gstreamer-dynamic-pipelines/

https://erit-lvx.medium.com/probes-handling-in-gstreamer-pipelines-3f96ea367f31

deepstream-test4 用prob取得metadata的範例

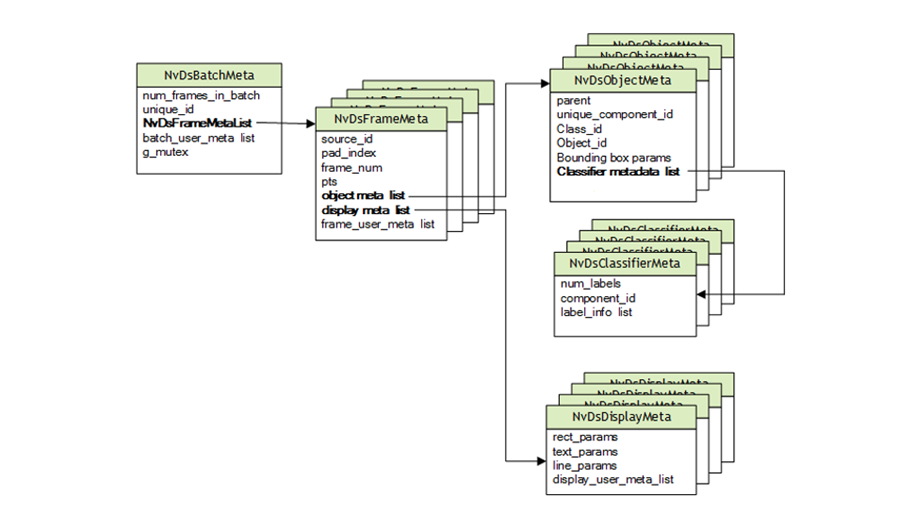

NvDsBatchMeta資料圖:

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_plugin_metadata.html

1 | static GstPadProbeReturn |

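What the NvDsObjEncUsrArgs Parameters Do

- bool isFrame: tells the encoder whether to encode the entire frame or a crop of each detected object.
  - 1: Encodes the entire frame.
  - 0: Encodes object of specified resolution.
- bool saveImg: saves an image directly to the current directory.
- bool attachUsrMeta: decides whether to attach NVDS_CROP_IMAGE_META metadata.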

Taking Snapshots in DeepStream, Using deepstream_image_meta_test as an Example

Note:

According to the documentation, nvds_obj_enc_process is non-blocking, so you must call nvds_obj_enc_finish() to make sure all images have actually been processed.

Step 1: set the condition for saving an image and encode it as a JPEG file.

```c
/* pgie_src_pad_buffer_probe will extract metadata received on pgie src pad
```

Step 2: check whether the meta_type of the user metadata is NVDS_CROP_IMAGE_META. If it is, save the image.

```c
/* osd_sink_pad_buffer_probe will extract metadata received on OSD sink pad
```

Adding Your Own Custom Metadata

Refer to nvinfer_src_pad_buffer_probe in the deepstream-user-metadata-test sample.

The user must provide four things:

- user_meta_data : pointer to User specific meta data
- meta_type : Metadata type that user sets to identify its metadata
- copy_func : Metadata copy or transform function to be provided when there is buffer transformation
- release_func : Metadata release function to be provided when it is no longer required.

This sample attaches an array of random numbers to the metadata. The functions needed to do this are listed below.

- user_meta_data

```c
void *set_metadata_ptr()
{
  int i = 0;
  /* allocate USER_ARRAY_SIZE zeroed bytes to hold the payload */
  gchar *user_metadata = (gchar*)g_malloc0(USER_ARRAY_SIZE);

  g_print("\n**************** Setting user metadata array of 16 on nvinfer src pad\n");
  /* fill every byte with a random value and log it */
  for(i = 0; i < USER_ARRAY_SIZE; i++) {
    user_metadata[i] = rand() % 255;
    g_print("user_meta_data [%d] = %d\n", i, user_metadata[i]);
  }
  return (void *)user_metadata;
}
```

- meta_type

Remember to define the variable inside the probe function.

```c
/** set the user metadata type */
```

- copy_func

```c
/* copy function set by user. "data" holds a pointer to NvDsUserMeta*/
```

- release_func

```c
/* release function set by user. "data" holds a pointer to NvDsUserMeta*/
```

- Add a probe that puts the data into the metadata:

```c
/* Set nvds user metadata at frame level. User need to set 4 parameters after
 * acquiring user meta from pool using nvds_acquire_user_meta_from_pool().
 *
 * Below parameters are required to be set.
 * 1. user_meta_data : pointer to User specific meta data
 * 2. meta_type: Metadata type that user sets to identify its metadata
 * 3. copy_func: Metadata copy or transform function to be provided when there
 *    is buffer transformation
 * 4. release_func: Metadata release function to be provided when it is no
 *    longer required.
 *
 * osd_sink_pad_buffer_probe will extract metadata received on OSD sink pad
 * and update params for drawing rectangle, object information etc. */
static GstPadProbeReturn
nvinfer_src_pad_buffer_probe (GstPad * pad, GstPadProbeInfo * info,
    gpointer u_data)
{
  GstBuffer *buf = (GstBuffer *) info->data;
  NvDsMetaList * l_frame = NULL;
  NvDsUserMeta *user_meta = NULL;
  NvDsMetaType user_meta_type = NVDS_USER_FRAME_META_EXAMPLE;

  NvDsBatchMeta *batch_meta = gst_buffer_get_nvds_batch_meta (buf);
  if (!batch_meta)   /* buffer carries no DeepStream batch meta */
    return GST_PAD_PROBE_OK;

  for (l_frame = batch_meta->frame_meta_list; l_frame != NULL;
      l_frame = l_frame->next) {
    NvDsFrameMeta *frame_meta = (NvDsFrameMeta *) (l_frame->data);

    /* Acquire NvDsUserMeta user meta from pool */
    user_meta = nvds_acquire_user_meta_from_pool(batch_meta);

    /* Set NvDsUserMeta below */
    user_meta->user_meta_data = (void *)set_metadata_ptr();
    user_meta->base_meta.meta_type = user_meta_type;
    user_meta->base_meta.copy_func = (NvDsMetaCopyFunc)copy_user_meta;
    user_meta->base_meta.release_func = (NvDsMetaReleaseFunc)release_user_meta;

    /* We want to add NvDsUserMeta to frame level */
    nvds_add_user_meta_to_frame(frame_meta, user_meta);
  }
  return GST_PAD_PROBE_OK;
}
```
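The copy_func and release_func bodies above are truncated. The ownership contract they follow can be sketched with a mock: "data" points to the user meta, copy_func returns a deep copy of the payload, and release_func frees it. MockUserMeta is a hypothetical stand-in for NvDsUserMeta that models only the payload pointer. The sketch uses malloc/free instead of GLib's g_malloc0/g_free so it runs standalone. USER_ARRAY_SIZE = 16 is assumed from the "array of 16" log message in set_metadata_ptr().

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

constexpr std::size_t USER_ARRAY_SIZE = 16;  // assumed payload size

struct MockUserMeta {        // hypothetical stand-in for NvDsUserMeta
    void* user_meta_data;    // payload owned by this meta
};

// copy_func: called when the buffer is copied or transformed. Returns a
// freshly allocated deep copy so each meta owns its own payload.
void* copy_user_meta(void* data, void* /*user_data*/) {
    const MockUserMeta* src = static_cast<const MockUserMeta*>(data);
    if (src->user_meta_data == nullptr) return nullptr;
    void* dst = std::malloc(USER_ARRAY_SIZE);
    if (dst != nullptr) std::memcpy(dst, src->user_meta_data, USER_ARRAY_SIZE);
    return dst;
}

// release_func: called when the meta is no longer needed. Frees the payload
// and clears the pointer so a second release is harmless.
void release_user_meta(void* data, void* /*user_data*/) {
    MockUserMeta* meta = static_cast<MockUserMeta*>(data);
    std::free(meta->user_meta_data);
    meta->user_meta_data = nullptr;
}
```

A shallow copy (returning the same pointer) would make two metas free one buffer. That double free is what the deep copy avoids.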

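Converting Custom Messages to JSON for Sending

What nvmsgconv does:

It uses NVDS_EVENT_MSG_META metadata to generate a JSON "DeepStream Schema" payload.

The generated payload is stored in the buffer as NVDS_META_PAYLOAD.

Besides the common message structures defined in NvDsEventMsgMeta, users can define custom objects and add them to the NVDS_EVENT_MSG_META metadata. For this, NvDsEventMsgMeta provides the "extMsg" and "extMsgSize" fields: assign a pointer to your custom structure to "extMsg" and specify its size in "extMsgSize".

Taking deepstream-test4 as an example, the message carries the custom objects NvDsVehicleObject and NvDsPersonObject. To send your own custom message, you have to define it yourself.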

Creating Your Own Custom Message

See /opt/nvidia/deepstream/deepstream-6.2/sources/libs/nvmsgconv/deepstream_schema/eventmsg_payload.cpp for how custom messages are defined and converted to JSON.

nvmsgconv source code:

/opt/nvidia/deepstream/deepstream-6.2/sources/gst-plugins/gst-nvmsgconv

/opt/nvidia/deepstream/deepstream-6.2/sources/libs/nvmsgconv

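- Enabling nvmsgconv debugging

  debug-payload-dir: Absolute path of the directory to dump payloads for debugging

- Taking deepstream-test4 as an example, first convert the model's detection results (NvDsObjectMeta) into NvDsEventMsgMeta; in this step the message struct is attached to extMsg.
- Then add the completed NvDsEventMsgMeta to the buffer as NvDsUserMeta metadata; in this step you also have to specify meta_copy_func and meta_free_func.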
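The extMsg/extMsgSize mechanism and the meta_copy_func/meta_free_func requirement amount to one rule. The event meta owns a heap copy of your struct, and the copy and free functions must duplicate and release it. MockEventMsgMeta is a hypothetical stand-in that keeps only those two fields of NvDsEventMsgMeta, and MyCustomObject is an invented payload type. Because MyCustomObject has no pointers, a byte-for-byte copy of extMsgSize bytes is enough. A struct that holds strings, as the test4 objects do, would also need those strings duplicated.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

struct MyCustomObject {          // hypothetical user-defined message object
    char label[32];
    int count;
};

struct MockEventMsgMeta {        // hypothetical subset of NvDsEventMsgMeta
    void* extMsg;                // pointer to the user-defined structure
    unsigned int extMsgSize;     // its size in bytes
};

// Attach a heap copy of `obj`; the meta owns it afterwards.
void attach_custom_object(MockEventMsgMeta& meta, const MyCustomObject& obj) {
    void* copy = std::malloc(sizeof(MyCustomObject));
    if (copy == nullptr) { meta.extMsg = nullptr; meta.extMsgSize = 0; return; }
    std::memcpy(copy, &obj, sizeof(MyCustomObject));
    meta.extMsg = copy;
    meta.extMsgSize = sizeof(MyCustomObject);
}

// What the meta copy function must do for extMsg: duplicate extMsgSize bytes.
MockEventMsgMeta copy_event_meta(const MockEventMsgMeta& src) {
    MockEventMsgMeta dst{nullptr, 0};
    if (src.extMsg != nullptr && src.extMsgSize > 0) {
        dst.extMsg = std::malloc(src.extMsgSize);
        if (dst.extMsg != nullptr) {
            std::memcpy(dst.extMsg, src.extMsg, src.extMsgSize);
            dst.extMsgSize = src.extMsgSize;
        }
    }
    return dst;
}

// And the matching free function.
void free_event_meta(MockEventMsgMeta& meta) {
    std::free(meta.extMsg);
    meta.extMsg = nullptr;
    meta.extMsgSize = 0;
}
```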

How to Use nvmsgbroker

The following uses RabbitMQ as an example.

Installing the RabbitMQ client

The instructions are in README.md under /opt/nvidia/deepstream/deepstream/sources/libs/amqp_protocol_adaptor.

```shell
git clone -b v0.8.0 --recursive https://github.com/alanxz/rabbitmq-c.git
cd rabbitmq-c
mkdir build && cd build
cmake ..
cmake --build .
sudo cp librabbitmq/librabbitmq.so.4 /opt/nvidia/deepstream/deepstream/lib/
sudo ldconfig
```

Installing the RabbitMQ server

```shell
#Install rabbitmq on your ubuntu system: https://www.rabbitmq.com/install-debian.html
```

- Configure the connection details
  - Create a cfg_amqp.txt connection file (an example is in /opt/nvidia/deepstream/deepstream/sources/libs/amqp_protocol_adaptor) and pass it to nvmsgbroker. Example contents:

```ini
[message-broker]
```

  - exchange: the default exchange is amq.topic; you can change it to another exchange.
  - topic: the name of the topic to publish to.
  - share-connection: Uncommenting this field signifies that the connection created can be shared with other components within the same process.
  - Alternatively, pass the connection details directly to msgapi_connect_ptr:

```c
conn_handle = msgapi_connect_ptr((char *)"url;port;username;password",(nvds_msgapi_connect_cb_t) sample_msgapi_connect_cb, (char *)CFG_FILE);
```
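The msgapi_connect_ptr() call above takes the connection details as a single ";"-separated string in the order url;port;username;password. make_amqp_conn_str() is a hypothetical helper that assembles it and assumes the first field is a host name. It rejects fields containing ";" because the separator could not be told apart from the data. That restriction is an assumption, not documented adapter behavior.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: build "host;port;username;password".
// Returns an empty string if any text field contains ';'.
std::string make_amqp_conn_str(const std::string& host, int port,
                               const std::string& user,
                               const std::string& password) {
    for (const std::string* field : {&host, &user, &password}) {
        if (field->find(';') != std::string::npos) return "";
    }
    return host + ";" + std::to_string(port) + ";" + user + ";" + password;
}
```

For example, with RabbitMQ's default AMQP port and guest account, `make_amqp_conn_str("localhost", 5672, "guest", "guest")` yields "localhost;5672;guest;guest". Pass its `.c_str()` result (cast to char *) as the first argument.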

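- Test programs

  /opt/nvidia/deepstream/deepstream/sources/libs/amqp_protocol_adaptor contains the test programs test_amqp_proto_async.c and test_amqp_proto_sync.c, which you can use to check whether the connection works. Build and run them as follows:

```shell
make -f Makefile.test
./test_amqp_proto_async
./test_amqp_proto_sync
```

  Note:
  - You may need root privileges to build the programs in this folder.
  - libnvds_amqp_proto.so is located in /opt/nvidia/deepstream/deepstream-…/lib/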

- Testing and verifying the published messages
  - Create an exchange and a queue, and bind them together:

```shell
# Rabbitmq management:
```

  - Receive messages:

```shell
#Install the amqp-tools
```

  - Run the consumer:

```shell
amqp-consume -q "myqueue" -r "topicname" -e "amq.topic" ./test_amqp_recv.sh
```

Mixing C and C++ Code

https://hackmd.io/@rhythm/HyOxzDkmD

https://embeddedartistry.com/blog/2017/05/01/mixing-c-and-c-extern-c/
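The usual way to share a header between C and C++ is to wrap its declarations in extern "C" only when compiled as C++. Inside that block, C++ turns off name mangling, so C callers and dlsym() can find the symbol by its plain name. This is also why the custom parser functions above are declared extern "C". The function my_c_callable_add is a hypothetical example.

```cpp
#include <cassert>

// --- what would normally live in a shared header, e.g. my_api.h ---
#ifdef __cplusplus
extern "C" {          // C++ compilers: give these declarations C linkage
#endif

int my_c_callable_add(int a, int b);

#ifdef __cplusplus
}                     // end extern "C"
#endif

// --- implementation in a .cpp file ---
// The earlier declaration gives this definition C linkage, so the symbol
// name in the object file is the unmangled "my_c_callable_add".
int my_c_callable_add(int a, int b) {
    return a + b;
}
```

A .c file can include the same header unchanged, because the `#ifdef __cplusplus` guards hide the extern "C" lines from a C compiler.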

async property

In some cases, setting the async property to true makes the pipeline hang; the cause still needs further investigation.

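Detailed nvmsgconv Payload Settings

In /opt/nvidia/deepstream/deepstream/sources/libs/nvmsgconv/nvmsgconv.cpp you can find the function nvds_msg2p_ctx_create, which creates the context (including the parsed configuration) used to generate payloads. The groups and properties that can be set in the YAML file read by nvmsgconv are as follows: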

sensor

- enable: whether this sensor is enabled
- id: corresponds to sensorId in NvDsEventMsgMeta
- type
- description
- location: in lat;lon;alt format
- coordinate: in x;y;z format
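The location and coordinate values above are three numbers separated by ";". parse_triplet() is a hypothetical helper that splits such a value into three doubles and rejects anything that is not exactly three numeric fields. It is not nvmsgconv's own parser, only a way to check a value before putting it in the YAML file.

```cpp
#include <array>
#include <cassert>
#include <cstdlib>
#include <sstream>
#include <string>

// Parse "a;b;c" (e.g. "lat;lon;alt" or "x;y;z") into three doubles.
// Returns false unless there are exactly three numeric fields.
bool parse_triplet(const std::string& text, std::array<double, 3>& out) {
    std::istringstream in(text);
    std::string field;
    std::size_t n = 0;
    while (std::getline(in, field, ';')) {
        if (n == 3) return false;                  // a fourth field
        char* end = nullptr;
        const double v = std::strtod(field.c_str(), &end);
        if (end == field.c_str() || *end != '\0')  // empty or trailing junk
            return false;
        out[n++] = v;
    }
    return n == 3;
}
```

On failure `out` may be partially overwritten, so only use it when the function returns true.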

place

analytics

Implementation Details of Converting NvDsEventMsgMeta to JSON

The program /opt/nvidia/deepstream/deepstream-6.2/sources/libs/nvmsgconv/deepstream_schema/eventmsg_payload.cpp implements the conversions for sensor, place, and analytics respectively.

Customizing the nvmsgconv Payload

To customize the payload, refer to the implementation in /opt/nvidia/deepstream/deepstream-6.2/sources/libs/nvmsgconv/deepstream_schema/eventmsg_payload.cpp and add the custom payload you need. First, copy the entire /opt/nvidia/deepstream/deepstream-6.2/sources/libs/nvmsgconv directory to another folder and prepare the build environment.

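Build Environment

This section describes how to set up the environment on an Ubuntu 20.04 desktop machine; the setup on Jetson may differ slightly.

- Download and build protobuf

On Ubuntu 20.04, installing protobuf with apt-get only gets version 3.6, but many of the required headers only exist from 3.7 onward, and the version must not exceed 3.19, otherwise some headers go missing again. If you get a step wrong, as long as you have not yet run make install, it is best to delete the protobuf folder, download it again, and rebuild.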

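First, download the protobuf source code directly from GitHub:

```shell
git clone https://github.com/protocolbuffers/protobuf.git
```

Switch to version v3.19.6 and update the submodules.

```shell
cd protobuf
```

Build and install. If make check reports errors, the resulting build may be missing some functionality.

```shell
./configure
```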
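The version window above (at least 3.7, at most 3.19) can be checked at compile time. This sketch assumes GOOGLE_PROTOBUF_VERSION from <google/protobuf/stubs/common.h>, which encodes a version as major*1000000 + minor*1000 + patch, so 3.19.6 is 3019006. The check takes the number as an argument so the sketch compiles without protobuf installed. protobuf_version_ok is a hypothetical name.

```cpp
#include <cassert>

// Accept 3.7.0 up to and including any 3.19.x release.
constexpr bool protobuf_version_ok(int encoded) {
    return encoded >= 3007000 && encoded < 3020000;
}

// In a real build, with protobuf headers available, you could write:
//   static_assert(protobuf_version_ok(GOOGLE_PROTOBUF_VERSION),
//                 "protobuf must be >= 3.7 and <= 3.19");
static_assert(protobuf_version_ok(3019006), "v3.19.6 should be accepted");
```

A failed static_assert stops the nvmsgconv build early with a clear message, instead of a later error about a missing header.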

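Building the Custom nvmsgconv

Next, go into the nvmsgconv folder, change the name of the output library file and the install location, and then build it with make.

Pretrained Models

/opt/nvidia/deepstream/deepstream-6.2/samples/models/tao_pretrained_models/trafficcamnet

USB Camera

Saving Video

Measuring Element Speed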

https://forums.developer.nvidia.com/t/deepstream-sdk-faq/80236/12?u=jenhao